Setting up new servers is tedious and time-consuming. In this article, I’ll show you how to skip all that, automate the entire process, and provision new servers in a matter of minutes with little to no intervention on your end.

Don’t get me wrong. As a web developer, there’s no better way to understand how web servers work than building your own from scratch. It’s a great learning experience, one that I recommend all WordPress developers undertake. Doing so will give you a greater understanding of the various components required to serve a website, not just the code you write. It can also broaden your knowledge on security and performance topics, which are often overlooked when you’re deep into coding.

However, once you are familiar with the process, setting up new servers is a task that you’re better off automating. Thankfully, you can do this using a tool called Ansible.

Why Ansible?

Ansible is an open-source automation tool for provisioning, application deployment (WordPress deployment in this case), and configuration management. Gone are the days of SSHing into your server to run a command or hacking together bash scripts to semi-automate painful tasks. Whether you’re managing a single server or an entire fleet, Ansible can simplify the process and save you time. So what makes Ansible so great?

Like SpinupWP, Ansible is completely agentless, meaning you don’t have to install any Ansible software on your remote servers (aka managed hosts). All commands are run through Ansible via SSH. If Ansible needs updating, you only need to update your single control machine and not any remote hosts. The only prerequisite is Python: it must be installed on your control machine, and most modules also expect a Python interpreter on the managed hosts, which the Ubuntu servers used in this article already include.

Most commands you execute via Ansible modules are idempotent, meaning they can be applied multiple times and will always result in the same outcome. This allows you to safely run commands against multiple hosts without anything being changed unless required. For example, let’s say you need to ensure Nginx is installed on all hosts. Just run one command and Ansible will install Nginx only on the hosts that are missing it. All other hosts will remain untouched.

That’s enough of an introduction. Let’s see Ansible in action.

Installing Ansible

We need to set up a single control machine which we’ll use to execute our commands. I’m going to install Ansible locally on macOS, but any Unix-like platform with Python installed would also work (e.g., Ubuntu, Red Hat, CentOS, etc.). Currently, Ansible requires Python version 3.8 or newer. Windows is not supported as a control machine at this time.

To install Ansible on macOS, first, install the Python package manager, pip.

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

python3 get-pip.py

You may see a Homebrew deprecation warning about “Configuring installation scheme with distutils config files,” which points to this Homebrew issue. The issue details the fact that this is a Python deprecation warning of something that will be removed in Python 3.12, but that a full solution will be implemented before then, so it’s safe to continue.

Then install Ansible using pip.

sudo pip install ansible

Once the installation has completed, you can verify that everything was installed correctly by issuing:

ansible --version

On Linux operating systems, it should be possible to install Ansible via the default package manager. In Ubuntu 21.10 and newer, you can install it using apt.

sudo apt install ansible

However, if you’re running Ubuntu 22.04 LTS, you need to first add the Ansible PPA, before you can install Ansible.

sudo apt update

sudo apt install software-properties-common

sudo add-apt-repository --yes --update ppa:ansible/ansible

sudo apt install ansible

sudo apt install python3-pip

sudo pip install passlib

The Ansible docs have detailed instructions for other operating systems.

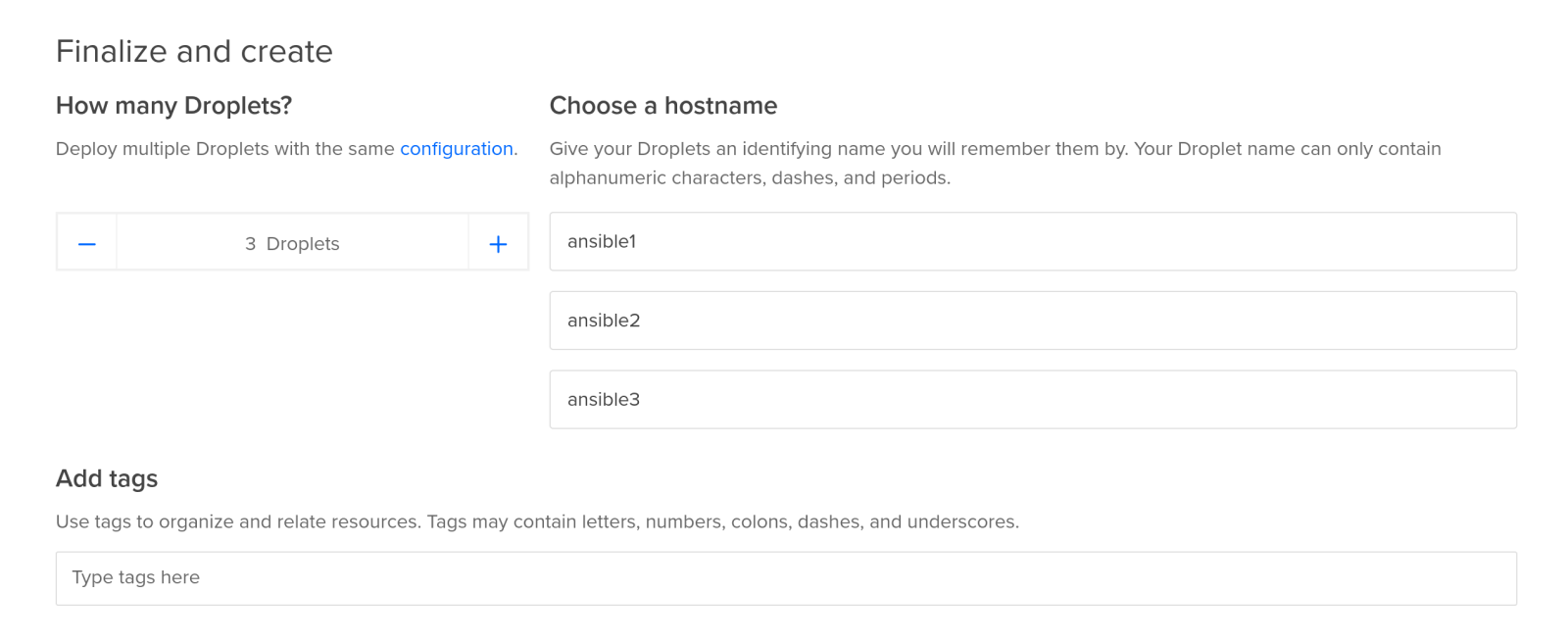

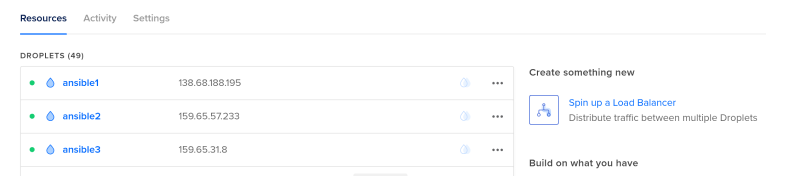

Now that Ansible is set up, we need a few servers to work with. For the purpose of this article, I’m going to fire up three small DigitalOcean droplets with Ubuntu 22.04 LTS x64 installed. I’ve also added my public key so that it will be copied to each host during the droplet creation. This will ensure we can SSH in via Ansible using the root user without providing a password later on.

Once they’ve finished provisioning, you’ll be presented with the IP addresses.

Make sure you have manually SSHed into each droplet as the root user to validate that the ECDSA key fingerprint is valid, and add it to the list of known hosts on your control machine.

ashley@macbook:~$ ssh root@138.68.188.195

The authenticity of host '138.68.188.195 (138.68.188.195)' can't be established.

ECDSA key fingerprint is SHA256:xCF0vWr/hG6qz2wSAdQRf1XB7ZdM2lOCnhvh2swe9LY.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Inventory Setup

Ansible uses a simple inventory system to manage your hosts. This allows you to organize hosts into logical groups and negates the need to remember individual IP addresses or domain names. Want to run a command only on your staging servers? No problem. Pass the group name to the CLI command and Ansible will handle the rest.

Next, let’s create our inventory. Before doing so, we need to create a new directory to house our Ansible logic. Anywhere is fine, but I use my home directory.

mkdir ~/wordpress-ansible

The default location for the inventory file is /etc/ansible/hosts. However, we’re going to configure Ansible to use a different hosts file. Create a new plain text file called hosts in the new directory,

cd wordpress-ansible/

nano hosts

with the following contents:

[production]

138.68.188.195

159.65.57.233

159.65.31.8

The first line indicates the group name. The following lines are the servers we provisioned in DigitalOcean. Multiple groups can be created using the [group name] syntax and hosts can belong to multiple groups. For example:

[staging]

139.59.170.69

[production]

138.68.188.195

159.65.57.233

159.65.31.8

[wordpress]

139.59.170.69

139.59.170.70

139.59.170.79
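Ansible also accepts inventories written in YAML. As a rough sketch, the grouped inventory above could be expressed as follows. The file name hosts.yml is hypothetical (the YAML inventory plugin expects a .yml or .yaml extension), and the ansible_user group variable is an addition that is not part of the original setup; it simply saves passing the SSH user on the command line.

```yaml
# hosts.yml (hypothetical file name; this article uses the INI-style "hosts" file)
all:
  children:
    staging:
      hosts:
        139.59.170.69:
    production:
      hosts:
        138.68.188.195:
        159.65.57.233:
        159.65.31.8:
      vars:
        # Assumption: connect as root, as in the rest of this article
        ansible_user: root
    wordpress:
      hosts:
        139.59.170.69:
        139.59.170.70:
        139.59.170.79:
```

The rest of this article sticks with the INI-style hosts file.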

Now we need to configure Ansible to tell it where our hosts file is located. Create a new file called ansible.cfg

nano ansible.cfg

with the following contents.

[defaults]

inventory = hosts

Running Commands

With our inventory file populated we can start running basic commands on the hosts, but first let’s briefly look at modules. Modules are small plugins that are executed on the host and allow you to interact with the remote system as if you were logged in via SSH. Common modules include apt, service, file, and lineinfile. Ansible ships with hundreds of core modules, all of which are maintained by the core development team. Modules greatly simplify the process of running commands on your remote systems and cut down the need to manually write shell or bash scripts. Generally, most Unix commands have an associated module. If not, someone else has probably created one.

Let’s take a look at the ping module, which ensures we can connect to our hosts by returning a “pong” response if successful:

ansible production -m ping -u root

To build the syntax, we provide the group, followed by the module we wish to execute. We also need to provide the remote SSH user (by default, Ansible will attempt to connect using your local user). Assuming everything is set up correctly, you should receive three success responses.

ashley@macbook:~/wordpress-ansible$ ansible production -m ping -u root

138.68.188.195 | SUCCESS => {

    "ansible_facts": {

        "discovered_interpreter_python": "/usr/bin/python3"

    },

    "changed": false,

    "ping": "pong"

}

159.65.57.233 | SUCCESS => {

    "ansible_facts": {

        "discovered_interpreter_python": "/usr/bin/python3"

    },

    "changed": false,

    "ping": "pong"

}

159.65.31.8 | SUCCESS => {

    "ansible_facts": {

        "discovered_interpreter_python": "/usr/bin/python3"

    },

    "changed": false,

    "ping": "pong"

}

You can also run any arbitrary command on the remote hosts using the -a flag. For example, to view the available memory on each host:

ansible all -a "free -m" -u root

This time I haven’t provided a group, but instead passed all which will run the command across every host in your inventory file.

ashley@macbook:~/wordpress-ansible$ ansible all -a "free -m" -u root

138.68.188.195 | CHANGED | rc=0 >>

               total        used        free      shared  buff/cache   available

Mem:             976         142         431           0         403         687

Swap:              0           0           0

159.65.57.233 | CHANGED | rc=0 >>

               total        used        free      shared  buff/cache   available

Mem:             976         142         431           0         402         688

Swap:              0           0           0

159.65.31.8 | CHANGED | rc=0 >>

               total        used        free      shared  buff/cache   available

Mem:             976         141         433           0         402         688

Swap:              0           0           0

Already you should start to see how much time Ansible can save you over manually SSHing to your server to run commands, but running single commands on your hosts will only get you so far. Often, you will want to perform a series of sequential actions to fully automate the process of provisioning, deploying, and maintaining your servers. Let’s take a look at playbooks.

Ansible Playbooks

Playbooks allow you to chain commands together, essentially creating a blueprint or set of procedural instructions. Ansible will execute the playbook in sequence and ensure the state of each command is as desired before moving on to the next. If you cancel the playbook execution partway through and restart it later, Ansible checks every task again: tasks whose desired state is already in place report ok and change nothing, and only the remaining tasks make changes.
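As a minimal sketch, the two ad-hoc commands from the previous section could be chained into a playbook like this. The file name check.yml and the memory variable are placeholders for illustration rather than part of the finished playbook.

```yaml
# check.yml (hypothetical file name)
---
- hosts: production
  user: root
  tasks:
    - name: Check the host is reachable
      ping:

    - name: Read available memory
      command: free -m
      register: memory      # store the command result in a variable
      changed_when: false   # a read-only command never changes the host

    - name: Show the memory report
      debug:
        var: memory.stdout_lines
```

You would run it with ansible-playbook check.yml. Setting changed_when to false keeps the read-only command from being reported as a change on every run.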

Playbooks allow you to create truly complex instructions, but if you’re not careful they can quickly become unwieldy. This brings us to roles.

Roles add organization to Ansible playbooks. They allow you to split your complex build instructions into smaller reusable chunks, much like a class or function in object-oriented programming. This makes it possible to share your roles across different playbooks without duplicating code. For example, you may have a role for installing Nginx and configuring sensible defaults which can be used across multiple hosting environments.
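To illustrate the reuse, here is a rough sketch of one role shared between two groups of hosts. The file name site.yml is hypothetical, and the sketch assumes that a role named nginx exists under a roles directory and that your inventory defines both production and staging groups.

```yaml
# site.yml (hypothetical file name)
---
# First play: production servers
- hosts: production
  user: root
  roles:
    - nginx   # assumed role name

# Second play: staging servers reuse the same role with no duplicated tasks
- hosts: staging
  user: root
  roles:
    - nginx
```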

Provisioning a Modern Hosting Environment on Ubuntu 22.04

For the remainder of this article, I’m going to show you how to put together a playbook based on our How to Install WordPress on Ubuntu 22.04 guide. The provisioning process will take care of the following:

- User setup

- SSH hardening

- Firewall setup

It will also install the following software:

- Nginx

- PHP 8.1

- MySQL

- Redis

- WP-CLI

You can clone the completed playbook from GitHub and follow along, but I will explain how it works below.

Organization

Let’s take a look at how our playbook is organized.

├── ansible.cfg

├── hosts

├── provision.yml

└── roles

    └── nginx

        ├── handlers

        │   └── main.yml

        ├── tasks

        │   └── main.yml

        ...

The hosts and ansible.cfg files should be familiar, but let’s take a look at the provision.yml file.

---

- hosts: production

  user: root

  vars:

    username: ansible

    password: $6$rlLdG6wd1CT8v7i$7psP8l26lmaPhT3cigoYYXhjG28CtD1ifILq9KzvA0W0TH2Hj4.iO43RkPWgJGIi60Mz0CsxWbRVBSQkAY95W0

    public_key: ~/.ssh/id_rsa.pub

  roles:

    - common

    - ufw

    - user

    - nginx

    - php

    - mysql

    - wp-cli

    - ssh

We set the group of hosts from our inventory file, select the user to run the commands, specify a few variables used by our roles, and list the roles to execute. The variables instruct Ansible which user to create on the remote hosts. We provide the username, the hashed sudo password, and the path to our public key. You’ll notice that I’ve included the password here, but for a more secure solution you should look into Ansible Vault. Once each server has been provisioned you will need to SSH in with the specified user, as the root user will be disabled.
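As a sketch of the Ansible Vault approach, the hash could be moved into an encrypted variables file and referenced from the playbook. The file name vault.yml and the variable name vault_password are made up for illustration.

```yaml
# provision.yml (sketch): load the password hash from an encrypted file
---
- hosts: production
  user: root
  vars_files:
    - vault.yml   # hypothetical file, encrypted with: ansible-vault encrypt vault.yml
  vars:
    username: ansible
    password: "{{ vault_password }}"   # hypothetical variable defined inside vault.yml
    public_key: ~/.ssh/id_rsa.pub
  roles:
    - common
    # ...remaining roles as listed above
```

You would then run the playbook with ansible-playbook provision.yml --ask-vault-pass so that Ansible can decrypt the file.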

The roles mostly map to the tasks we need to perform and the software that needs to be installed. The common role performs simple actions that do not need additional configuration, for example installing Fail2Ban.
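A role like this might contain little more than a couple of apt tasks. The following is a sketch rather than the exact contents of the role in the repo: the task names are taken from the playbook output later in this article, while the upgrade mode and the package list are assumptions.

```yaml
# roles/common/tasks/main.yml (sketch; the real role may differ)
---
- name: Upgrade packages
  apt:
    upgrade: dist        # assumption: the real role may use a different upgrade mode
    update_cache: yes

- name: Install packages
  apt:
    name:
      - fail2ban         # mentioned above; other packages may also be installed
    state: present
```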

Let’s break down the Nginx role to see how roles are put together, as it contains the majority of modules used throughout the remainder of the playbook.

Handlers

Handlers contain logic that should be performed after a module has finished executing, and they work very similarly to notifications or events. For example, when the Nginx configurations have changed, run service nginx reload. It’s important to note that these events are only fired when the module state has changed. If the configuration file didn’t require any updates, Nginx will not be reloaded. Let’s take a look at the Nginx handler file:

---

- name: restart nginx

  service:

    name: nginx

    state: restarted

- name: reload nginx

  service:

    name: nginx

    state: reloaded

You’ll see we have two handlers: one to restart Nginx and one to reload the configuration files.

Tasks

Tasks contain the actual instructions which are to be carried out by the role. Nginx consists of the following steps:

---

- name: Add Nginx repo

  apt_repository:

    repo: ppa:ondrej/nginx

The first command adds the package repository maintained by Ondřej Surý that includes the latest Nginx stable packages (this is the equivalent of doing add-apt-repository in Ubuntu). Each command is formatted the same way: provide a name, the module we wish to execute, and any additional parameters. In the case of apt_repository, we just pass the repo we wish to add.

Next, we need to install Nginx.

- name: Install Nginx

  apt:

    name: nginx

    state: present

    force: yes

    update_cache: yes

The command is fairly self-explanatory, but state and update_cache are worth touching upon. The state parameter indicates the desired package state. In our case, we want to ensure Nginx is installed, but you could pass latest to ensure that the most current version is installed. Because we added a new repo in the prior command, we also need to run apt-get update, which the update_cache parameter handles. This refreshes the package cache so that Nginx is installed from the newly added repository.

You’ll definitely need to customize the Nginx configs for whatever you’re hosting, but that’s outside the scope of this article. If you’re hosting WordPress, or really any PHP-based app, I suggest taking a look at our Install WordPress on Ubuntu 22.04 guide and downloading the accompanying Nginx configs.

The file module allows us to symlink the default site into the sites-enabled directory, which configures a catch-all virtual host and ensures we only respond to enabled sites. You will also see that we notify the reload nginx handler for the changes to take effect.

- name: Symlink default site

  file:

    src: /etc/nginx/sites-available/default

    dest: /etc/nginx/sites-enabled/default

    state: link

  notify: reload nginx

Next, we use the lineinfile module to update our Nginx config. We search the /etc/nginx/nginx.conf file for a line beginning with user and replace it with user {{ username }};. The {{ username }} is an Ansible variable that refers to a value in our main provision.yml file.

Finally, we restart Nginx to ensure the new user is used for spawning processes.

- name: Set Nginx user

  lineinfile:

    dest: /etc/nginx/nginx.conf

    regexp: "^user"

    line: "user {{ username }};"

    state: present

  notify: restart nginx

That’s all there is to the Nginx role. Check out the other roles on the repo to get a feel for how they work.
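For example, an SSH hardening role can be built from the same lineinfile and notify pattern. This is a sketch and not a copy of the role in the repo: the task and handler names come from the playbook output below, while the regular expressions and the ssh service name are assumptions based on a default Ubuntu install.

```yaml
# roles/ssh/tasks/main.yml (sketch; the real role may differ)
---
- name: Disable root login
  lineinfile:
    dest: /etc/ssh/sshd_config
    regexp: "^#?PermitRootLogin"       # assumption: match the active or commented-out setting
    line: "PermitRootLogin no"
    state: present
  notify: restart ssh

- name: Disable password authentication
  lineinfile:
    dest: /etc/ssh/sshd_config
    regexp: "^#?PasswordAuthentication"
    line: "PasswordAuthentication no"
    state: present
  notify: restart ssh

# roles/ssh/handlers/main.yml (sketch)
---
- name: restart ssh
  service:
    name: ssh                          # assumption: the SSH service name on Ubuntu
    state: restarted
```

Because both tasks notify the same handler, SSH is restarted only once at the end of the run, and only if at least one of the lines actually changed.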

Running the Playbook

To run the playbook, use the following command:

ansible-playbook provision.yml

Assuming your hosts file is populated and the hosts are accessible, your servers should begin to provision.

ashley@macbook:~/wordpress-ansible$ ansible-playbook provision.yml

PLAY [production] ****************************************

TASK [Gathering Facts] ****************************************
ok: [138.68.188.195]
ok: [159.65.57.233]
ok: [159.65.31.8]

TASK [common : Upgrade packages] ****************************************
ok: [159.65.31.8]
ok: [159.65.57.233]
ok: [138.68.188.195]

TASK [common : Install packages] ****************************************
changed: [159.65.57.233]
changed: [138.68.188.195]
changed: [159.65.31.8]

TASK [ufw : Enable firewall] ****************************************
changed: [159.65.57.233]
changed: [159.65.31.8]
changed: [138.68.188.195]

TASK [ufw : Allow HTTP] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [ufw : Allow HTTPS] ****************************************
changed: [138.68.188.195]
changed: [159.65.31.8]
changed: [159.65.57.233]

TASK [ufw : Allow SSH] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [user : Ensure sudo group is present] ****************************************
ok: [138.68.188.195]
ok: [159.65.31.8]
ok: [159.65.57.233]

TASK [user : Ensure sudo group has sudo privileges] ****************************************
changed: [138.68.188.195]
changed: [159.65.57.233]
changed: [159.65.31.8]

TASK [user : Create default user] ****************************************
changed: [138.68.188.195]
changed: [159.65.57.233]
changed: [159.65.31.8]

TASK [user : Add authorized key] ****************************************
changed: [138.68.188.195]
changed: [159.65.57.233]
changed: [159.65.31.8]

TASK [nginx : Add Nginx repo] ****************************************
changed: [159.65.31.8]
changed: [159.65.57.233]
changed: [138.68.188.195]

TASK [nginx : Install Nginx] ****************************************
changed: [138.68.188.195]
changed: [159.65.31.8]
changed: [159.65.57.233]

TASK [nginx : Symlink default site] ****************************************
ok: [159.65.31.8]
ok: [159.65.57.233]
ok: [138.68.188.195]

TASK [nginx : Set Nginx user] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [php : Add PHP repo] ****************************************
changed: [138.68.188.195]
changed: [159.65.57.233]
changed: [159.65.31.8]

TASK [php : Install PHP] ****************************************
changed: [138.68.188.195]
changed: [159.65.31.8]
changed: [159.65.57.233]

TASK [php : Set PHP user] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [php : Set PHP group] ****************************************
changed: [138.68.188.195]
changed: [159.65.31.8]
changed: [159.65.57.233]

TASK [php : Set PHP listen owner] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [php : Set PHP listen group] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [php : Set PHP upload max filesize] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [php : Set PHP post max filesize] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [mysql : Install MySQL] ****************************************
changed: [159.65.31.8]
changed: [159.65.57.233]
changed: [138.68.188.195]

TASK [wp-cli : Install WP-CLI] ****************************************
changed: [138.68.188.195]
changed: [159.65.57.233]
changed: [159.65.31.8]

TASK [wp-cli : Install WP-CLI tab completions] ****************************************
changed: [159.65.57.233]
changed: [159.65.31.8]
changed: [138.68.188.195]

TASK [ssh : Disable root login] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

TASK [ssh : Disable password authentication] ****************************************
ok: [159.65.31.8]
ok: [159.65.57.233]
ok: [138.68.188.195]

RUNNING HANDLER [nginx : restart nginx] ****************************************
changed: [159.65.31.8]
changed: [159.65.57.233]
changed: [138.68.188.195]

RUNNING HANDLER [php : reload php] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

RUNNING HANDLER [php : restart php] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

RUNNING HANDLER [ssh : restart ssh] ****************************************
changed: [159.65.31.8]
changed: [138.68.188.195]
changed: [159.65.57.233]

PLAY RECAP ****************************************
159.65.31.8 : ok=32 changed=27 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
159.65.57.233 : ok=32 changed=27 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
138.68.188.195 : ok=32 changed=27 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

The process should take roughly 5 minutes to complete all three servers, which is extraordinary when compared to the time it would take to provision them manually. Not only that, but if you’ve configured any of the roles incorrectly, you can fix the role and rerun the playbook, and any tasks that are already in their desired state will be left unchanged. Once complete, the servers are ready to house your individual sites and should provide a good level of performance and security out of the box.

Conclusion

Manually running dozens of commands every time you need to add a new site gets old quickly. You could script it, but that’s a lot of work, and before you know it your scripts are out of date, which gets old just as fast.

SpinupWP uses Ansible to manage your server, and its scripts are always up-to-date, so you might want to give it a try. It also handles backups.

As I’m sure you can appreciate, Ansible is a very powerful tool and one which can save you a considerable amount of time.

Do you use Ansible for provisioning? What about other tools such as Puppet, Chef or Salt? Let us know in the comments below.