In chapter 3 you learned how to add HTTPS sites to your server, but there is more we can do to improve HTTPS performance and security. We’ll also look at how we can minimize the risks from other types of attacks, such as XSS, clickjacking, and MIME sniffing.

If you would find it easier to see the whole Nginx config at once, feel free to download the complete Nginx config kit now.

SSL Hardening

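Although your site is configured to only serve HTTPS traffic, browsers can still make plain HTTP requests to it (for example, when a visitor types your domain without https://) and rely on your redirect to reach the secure version. Adding the Strict-Transport-Security header to the server response tells browsers to use HTTPS for all future connections to your domain, without making an insecure request first. An article by Scott Helme gives a thorough overview of the Strict-Transport-Security header.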

Open the main Nginx configuration file.

sudo nano /etc/nginx/nginx.conf

Add the following directive to the http block:

add_header Strict-Transport-Security "max-age=31536000; includeSubdomains";

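You may be wondering why the 301 redirect is still needed if this header enforces HTTPS. Browsers only honor the header after they’ve received it over HTTPS, so a visitor’s very first request can still arrive over plain HTTP and needs to be redirected. On top of that, the header isn’t supported by IE10 and below.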
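To see what the header value actually asks the browser to do, here is a minimal Python sketch that parses a Strict-Transport-Security value. The `parse_hsts` helper is hypothetical and ignores edge cases such as duplicate directives; it simply shows that `max-age=31536000` is one year expressed in seconds and that `includeSubdomains` extends the policy to every subdomain.

```python
def parse_hsts(value):
    """Parse a Strict-Transport-Security header value (simplified sketch)."""
    directives = {}
    for part in value.split(";"):
        name, _, arg = part.strip().partition("=")
        if name:
            # Directive names are case-insensitive; quoted values are unwrapped
            directives[name.lower()] = arg.strip('"') if arg else True
    # max-age is required and is expressed in seconds
    max_age = int(directives["max-age"])
    return {
        "max_age_days": max_age / 86400,
        "include_subdomains": "includesubdomains" in directives,
    }


print(parse_hsts("max-age=31536000; includeSubdomains"))
# {'max_age_days': 365.0, 'include_subdomains': True}
```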

Test your Nginx configuration and, if it’s OK, reload Nginx.

sudo nginx -t

sudo service nginx reload

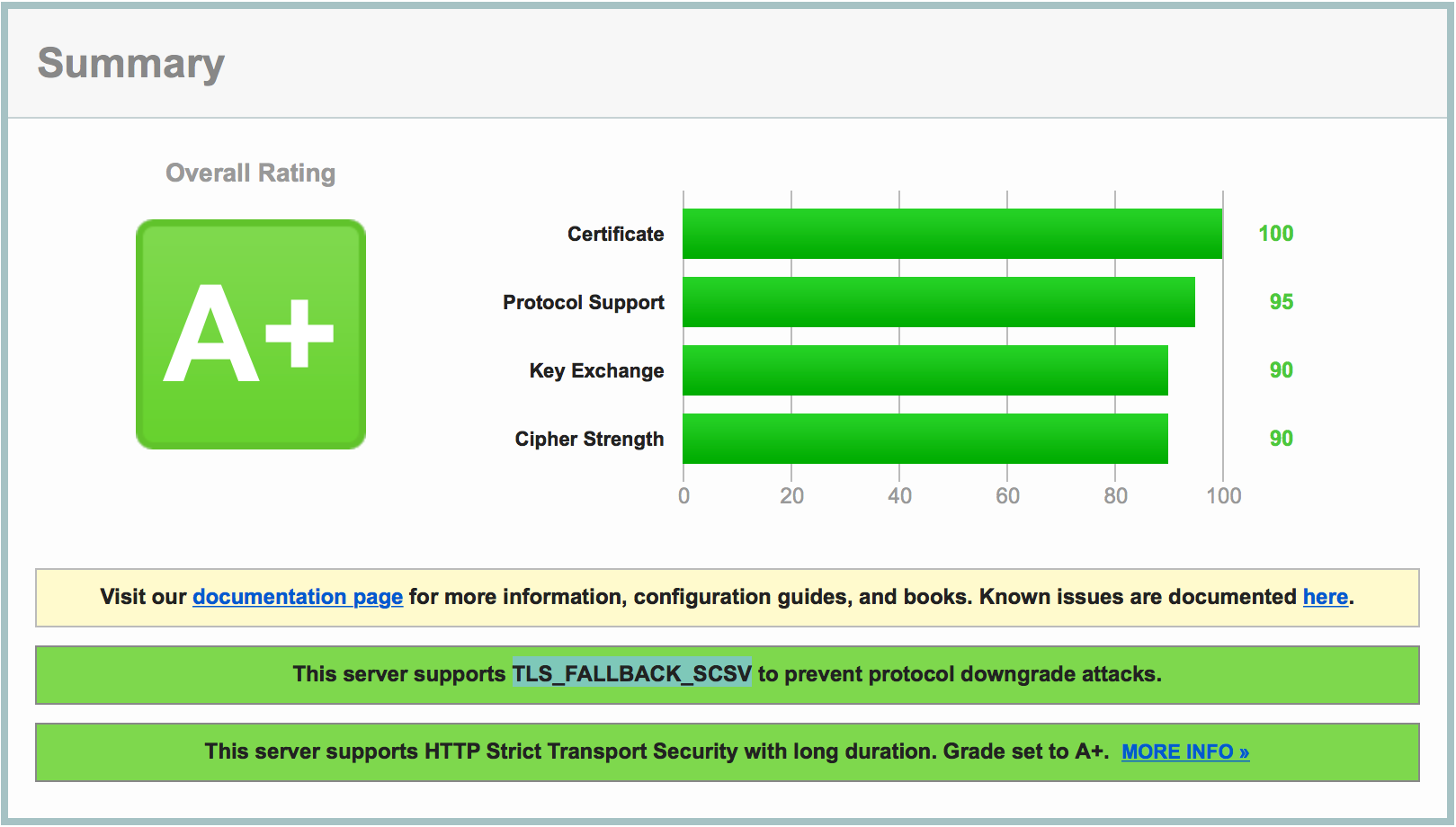

Now if you scan your site using the Qualys SSL Labs SSL Server Test, you should receive an A+ grade. Not bad!

SSL Performance

HTTPS connections are more resource-hungry than regular HTTP connections, mainly because of the additional handshake required to establish a secure connection. However, you can cache the SSL session parameters so that returning clients can resume their session with an abbreviated handshake instead of repeating the full one. Just remember that security is the name of the game, so cached sessions shouldn’t live too long: clients should be forced to perform a full handshake regularly. A session timeout of 10 minutes is usually a good starting point.

Open the main Nginx configuration file.

sudo nano /etc/nginx/nginx.conf

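Add the following directives within the http block. The first creates a 10 MB session cache named SSL that is shared between all Nginx worker processes (here the m suffix means megabytes), and the second lets clients reuse cached session parameters for up to 10 minutes.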

ssl_session_cache shared:SSL:10m;

ssl_session_timeout 10m;
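The trade-off between speed and regular re-authentication can be illustrated with a toy model. The Python below is not how Nginx or OpenSSL implement session caching: the `SessionCache` class and its `handshake` method are hypothetical, and it assumes the timeout is measured from when the session was first established. It shows a returning client getting an abbreviated handshake inside the 10-minute window and a full handshake after it. On the sizing side, the Nginx documentation states that one megabyte of cache holds about 4,000 sessions, so `shared:SSL:10m` has room for roughly 40,000.

```python
class SessionCache:
    """Toy model of a TLS session cache, for illustration only."""

    def __init__(self, timeout_seconds=600):
        # 600 seconds mirrors ssl_session_timeout 10m
        self.timeout = timeout_seconds
        self._created = {}  # session id -> time (seconds) the session was established

    def handshake(self, session_id, now):
        """Return the kind of handshake a client presenting session_id would get."""
        created = self._created.get(session_id)
        if created is not None and now - created < self.timeout:
            # Cached parameters are still valid: most of the expensive work is skipped
            return "abbreviated"
        # Unknown or expired session: negotiate from scratch and cache the result
        self._created[session_id] = now
        return "full"


cache = SessionCache()
print(cache.handshake("client-a", now=0))    # full
print(cache.handshake("client-a", now=300))  # abbreviated
print(cache.handshake("client-a", now=601))  # full
```

Shortening the timeout forces full handshakes more often (more CPU, fresher keys); lengthening it does the opposite.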

Test the Nginx configuration and reload if successful.

sudo nginx -t

sudo service nginx reload

Cross-Site Scripting (XSS)

The most effective way to deal with XSS is to ensure that you correctly validate and sanitize all user input in your code, including that within the WordPress admin areas. But most input validation and sanitization is out of your control when you consider third-party themes and plugins. You can however reduce the risk of XSS attacks by configuring Nginx to provide a few additional response headers.

Let’s assume an attacker has managed to embed a reference to a malicious JS file in your site’s source code, maybe through a comment form or something similar. By default, the browser will load this external file and execute its contents without question. Enter Content Security Policy, which allows you to define a whitelist of sources from which assets (JS, CSS, etc.) may be loaded. If the script’s source isn’t on the approved list, the browser won’t load it.

Creating a Content Security Policy can require some trial and error, as you need to be careful not to block assets that should be loaded, such as those provided by Google or other third-party vendors. This sample policy will allow the current domain and a few sources from Google and WordPress.org:

default-src 'self' https://*.google-analytics.com https://*.googleapis.com https://*.gstatic.com https://*.gravatar.com https://*.w.org data: 'unsafe-inline' 'unsafe-eval';

Alternatively, some people opt to only block non-HTTPS assets, which, although less secure, is a lot easier to manage:

default-src 'self' https: data: 'unsafe-inline' 'unsafe-eval';
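To make the difference between the two policies concrete, here’s a simplified Python model of how a browser checks a URL against a source list. It only understands `'self'`, scheme sources such as `https:` and `data:`, and host sources with an optional leading wildcard; real CSP matching also considers ports, paths, and redirects. The `parse_policy`, `source_matches`, and `allowed` helpers are hypothetical.

```python
from urllib.parse import urlsplit


def parse_policy(policy):
    """Split a CSP header value into {directive: [sources]}."""
    directives = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            directives[tokens[0].lower()] = tokens[1:]
    return directives


def source_matches(source, url, page_origin):
    target = urlsplit(url)
    if source == "'self'":
        page = urlsplit(page_origin)
        return (target.scheme, target.netloc) == (page.scheme, page.netloc)
    if source.endswith(":") and "/" not in source:
        # Scheme source such as https: or data:
        return target.scheme == source[:-1]
    if "://" in source:
        scheme, host = source.split("://", 1)
        if target.scheme != scheme or target.hostname is None:
            return False
        if host.startswith("*."):
            # *.example.com matches subdomains but not example.com itself
            return target.hostname.endswith(host[1:])
        return target.hostname == host
    # Keywords such as 'unsafe-inline' don't grant access to URLs
    return False


def allowed(policy, directive, url, page_origin):
    directives = parse_policy(policy)
    # Fall back to default-src when the specific directive isn't set
    sources = directives.get(directive, directives.get("default-src"))
    if sources is None:
        return True
    return any(source_matches(s, url, page_origin) for s in sources)


strict = ("default-src 'self' https://*.google-analytics.com https://*.googleapis.com "
          "https://*.gstatic.com https://*.gravatar.com https://*.w.org data: "
          "'unsafe-inline' 'unsafe-eval';")
loose = "default-src 'self' https: data: 'unsafe-inline' 'unsafe-eval';"
site = "https://myawesomesite.com"
print(allowed(strict, "script-src", "https://evil.example/x.js", site))  # False
print(allowed(loose, "script-src", "https://evil.example/x.js", site))   # True
```

The second result is the trade-off of the looser policy: any HTTPS source passes, including one an attacker controls.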

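You can add this directive to nginx.conf or to each site’s individual configuration file, depending on whether you want to share the policy across all sites. Personally, I specify a generic policy in the global config file and override it on a per-site basis as needed. Bear in mind that a server block only inherits add_header directives from the http block if it doesn’t define any add_header directives of its own, so when you override the policy for a site, you’ll need to repeat the other security headers in that server block too.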

sudo nano /etc/nginx/nginx.conf

Add the following code within the http block:

##
# Security
##

add_header Content-Security-Policy "default-src 'self' https: data: 'unsafe-inline' 'unsafe-eval';" always;

Some of you may have picked up on the fact that this only deals with external assets, but what about inline scripts? There are two ways you can handle this:

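- Block inline scripts by removing 'unsafe-inline' (and block eval() and similar dynamic code by removing 'unsafe-eval') from the Content-Security-Policy. However, this approach can break some third-party plugins or themes, so be careful.
- Enable X-Xss-Protection, which instructs the browser’s built-in XSS filter to detect script from the request being reflected back into the page and block it. Although not bulletproof, it’s a relatively simple countermeasure to implement. Note, though, that current versions of Chrome and Edge have removed this filter and Firefox never implemented it, so the header mainly helps visitors on older browsers.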

To enable the X-Xss-Protection filter add the following directive below the ‘Content-Security-Policy’ entry:

add_header X-Xss-Protection "1; mode=block" always;

These headers are no replacement for correct validation or sanitization, but they do add a useful extra layer of defense against common XSS attacks. Installing only high-quality plugins and themes from trusted sources remains your best first line of defense.
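The first option for handling inline scripts comes down to whether 'unsafe-inline' appears in the sources that govern scripts. A minimal Python sketch of that check follows; the `inline_scripts_allowed` helper is hypothetical, and it ignores nonces, hashes, and the newer `script-src-elem`/`script-src-attr` directives, all of which change the real outcome.

```python
def inline_scripts_allowed(policy):
    """Return True if a CSP value lets inline <script> blocks run (simplified)."""
    directives = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            directives[tokens[0].lower()] = tokens[1:]
    # script-src takes precedence; otherwise default-src applies
    sources = directives.get("script-src", directives.get("default-src"))
    if sources is None:
        # No applicable directive means scripts aren't restricted at all
        return True
    return "'unsafe-inline'" in sources


current = "default-src 'self' https: data: 'unsafe-inline' 'unsafe-eval';"
hardened = "default-src 'self' https: data:;"
print(inline_scripts_allowed(current))   # True
print(inline_scripts_allowed(hardened))  # False
```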

Clickjacking

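Clickjacking is an attack that tricks users into performing actions they didn’t intend, typically by loading your site inside a transparent or disguised iframe on the attacker’s page, so that clicks the user believes are on the attacker’s page actually land on your site. An article by Troy Hunt has a thorough explanation of clickjacking.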

The most effective way to combat this attack vector is to completely disable frame embedding from third party domains. To do this, add the following directive below the X-Xss-Protection header:

add_header X-Frame-Options "SAMEORIGIN" always;

This prevents pages on other domains from embedding your site in their own using an iframe, such as:

<iframe src="http://mydomain.com"></iframe>
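The browser-side decision can be sketched in Python as follows. This is a simplified model: the `frame_allowed` helper is hypothetical, it compares the framed page only with its immediate parent, and it doesn’t normalize default ports. Modern browsers apply further rules, such as checking ancestors further up in nested frames.

```python
from urllib.parse import urlsplit


def origin(url):
    parts = urlsplit(url)
    return (parts.scheme, parts.hostname, parts.port)


def frame_allowed(x_frame_options, framed_url, parent_url):
    """Decide whether framed_url may render inside a frame on parent_url."""
    value = x_frame_options.strip().upper()
    if value == "DENY":
        return False
    if value == "SAMEORIGIN":
        return origin(framed_url) == origin(parent_url)
    # No header (or an unrecognised value) places no restriction in this sketch
    return True


print(frame_allowed("SAMEORIGIN", "http://mydomain.com", "https://attacker.example/"))  # False
print(frame_allowed("SAMEORIGIN", "http://mydomain.com", "http://mydomain.com/page"))  # True
```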

MIME Sniffing

MIME sniffing, where the browser ignores the Content-Type sent by the server and guesses the file type from its contents, can expose your site to attacks such as “drive-by downloads.” The X-Content-Type-Options header counters this threat by ensuring the browser honors only the MIME type provided by the server. An article by Microsoft explains MIME sniffing in detail.

To disable MIME sniffing add the following directive:

add_header X-Content-Type-Options "nosniff" always;
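Roughly speaking, `nosniff` makes the browser refuse to run a script or apply a stylesheet whose declared Content-Type doesn’t match what it’s being used for. The Python sketch below models that check; the set of JavaScript MIME types is deliberately trimmed (the full list is defined in the WHATWG MIME Sniffing standard), and `blocked_by_nosniff` is a hypothetical helper.

```python
# A subset of the JavaScript MIME types browsers accept
JS_MIME_TYPES = {"text/javascript", "application/javascript", "application/x-javascript"}


def blocked_by_nosniff(destination, content_type, nosniff=True):
    """Return True if a response would be blocked under X-Content-Type-Options: nosniff."""
    if not nosniff:
        # Without the header the browser may sniff the content and use it anyway
        return False
    # Compare only the type/subtype, ignoring parameters such as charset
    essence = content_type.split(";")[0].strip().lower()
    if destination == "script":
        return essence not in JS_MIME_TYPES
    if destination == "style":
        return essence != "text/css"
    return False


print(blocked_by_nosniff("script", "text/plain"))                      # True
print(blocked_by_nosniff("script", "text/javascript; charset=utf-8"))  # False
```

So an uploaded file served as `text/plain` can no longer be pulled into a page and executed as a script.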

Referrer Policy

The Referrer-Policy header allows you to control which information is included in the Referer header (the HTTP header name has been misspelled since it was first standardized) when navigating from pages on your site. While referrer information can be useful, there are cases where you may not want the full URL passed to the destination server, for example, when navigating away from private content (think membership sites).

In fact, since WordPress 4.9, requests from the WordPress dashboard no longer pass the full admin URL as the referrer to external destinations. Because /wp-admin URLs aren’t passed to external domains when you navigate away from the dashboard, this helps avoid broadcasting the fact that your site is running on WordPress.

We can take this a step further by restricting the referrer information for all pages on our site, not just the WordPress dashboard. A common approach is to pass only the domain to the destination server, so instead of:

https://myawesomesite.com/top-secret-url

The destination would receive:

https://myawesomesite.com

You can achieve this using the following policy:

add_header Referrer-Policy "origin-when-cross-origin" always;

A full list of available policies can be found over at MDN.
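Here’s a simplified Python model of what `origin-when-cross-origin` sends. The `referrer_for` helper is hypothetical and skips details such as how browsers treat non-HTTP(S) URLs. One detail worth knowing: browsers serialize an origin-only referrer with a trailing slash, so the destination actually receives `https://myawesomesite.com/`.

```python
from urllib.parse import urlsplit, urlunsplit


def _origin(parts):
    return (parts.scheme, parts.hostname, parts.port)


def referrer_for(document_url, destination_url):
    """Referer value under Referrer-Policy: origin-when-cross-origin (simplified)."""
    doc = urlsplit(document_url)
    dest = urlsplit(destination_url)
    # Credentials and fragments are never included in the referrer
    host = doc.hostname + (f":{doc.port}" if doc.port else "")
    if _origin(doc) == _origin(dest):
        # Same-origin requests get the full URL (minus the fragment)
        return urlunsplit((doc.scheme, host, doc.path, doc.query, ""))
    # Cross-origin requests only get the origin
    return urlunsplit((doc.scheme, host, "/", "", ""))


print(referrer_for("https://myawesomesite.com/top-secret-url", "https://example.org/"))
# https://myawesomesite.com/
```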

Permissions Policy

The Permissions-Policy header lets a site enable and disable certain browser features and APIs, both on its own pages and in anything it embeds.

A Permissions Policy works by pairing a directive with an allowlist. The directive is the name of the feature you want to control, and the allowlist is the list of origins that are allowed to use that feature. MDN has a full list of available directives and allowlist values. Each directive has its own default allowlist, which applies if the directive isn’t explicitly listed in the policy.

You can specify several features at once by using a comma-separated list of policies. In the following example, we allow geolocation in all contexts, restrict the camera to your own origin and https://example.com, and block the microphone in all contexts:

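add_header Permissions-Policy 'geolocation=*, camera=(self "https://example.com"), microphone=()' always;

Origins in a Permissions-Policy allowlist must be wrapped in double quotes, so the whole value is enclosed in single quotes for Nginx.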
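A Permissions-Policy value can be read as a small dictionary mapping features to allowlists. The following Python sketch is a simplified parser: it splits on commas rather than implementing the full Structured Field Values grammar (RFC 8941), it expects origins in double quotes as the header syntax requires, and `parse_permissions_policy` and `feature_allowed` are hypothetical helpers.

```python
def parse_permissions_policy(header):
    """Turn a Permissions-Policy value into {feature: [allowlist entries]}."""
    policy = {}
    for member in header.split(","):
        feature, _, value = member.strip().partition("=")
        value = value.strip()
        if value.startswith("(") and value.endswith(")"):
            # Inner list such as (self "https://example.com") or ()
            policy[feature] = [item.strip('"') for item in value[1:-1].split()]
        else:
            # Single token such as * or self
            policy[feature] = [value.strip('"')]
    return policy


def feature_allowed(policy, feature, origin, self_origin):
    """Return True/False for listed features, None when the browser default applies."""
    if feature not in policy:
        return None  # default allowlist for the feature; not modelled here
    allowlist = policy[feature]
    if "*" in allowlist:
        return True
    if "self" in allowlist and origin == self_origin:
        return True
    return origin in allowlist


policy = parse_permissions_policy('geolocation=*, camera=(self "https://example.com"), microphone=()')
print(policy)
# {'geolocation': ['*'], 'camera': ['self', 'https://example.com'], 'microphone': []}
```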


That’s all of the suggested security headers implemented. Save and close the file by pressing Ctrl+X, then Y, then Enter. Before reloading the Nginx configuration, ensure there are no syntax errors.

sudo nginx -t

If no errors are shown, reload the configuration.

sudo service nginx reload

When you next load your site in a browser, you may see a few console errors about external assets being blocked. If so, adjust your Content-Security-Policy to allow them.

You can confirm the status of your site’s security headers using SecurityHeaders.io, which is an excellent free resource created by Scott Helme. This, in conjunction with the SSL Server Test by Qualys SSL Labs, should give you a good insight into your site’s security.
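If you’d also like a quick check from the command line, the Python sketch below sends a HEAD request using only the standard library and lists any of the headers from this chapter that are missing. It’s a rough aid rather than a substitute for the tools above; `fetch_headers` and `missing_headers` are hypothetical helpers.

```python
import urllib.request

# The headers configured in this chapter
EXPECTED_HEADERS = [
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Xss-Protection",
    "X-Frame-Options",
    "X-Content-Type-Options",
    "Referrer-Policy",
    "Permissions-Policy",
]


def fetch_headers(url):
    """Return the response headers for a HEAD request to url (redirects are followed)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return dict(response.getheaders())


def missing_headers(headers):
    """List expected headers absent from headers, comparing names case-insensitively."""
    present = {name.lower() for name in headers}
    return [name for name in EXPECTED_HEADERS if name.lower() not in present]
```

For example, `missing_headers(fetch_headers("https://myawesomesite.com"))` returns an empty list when every header is present. Note that `urlopen` raises an `HTTPError` for 4xx and 5xx responses.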

That concludes this chapter. In the next chapter we’ll move a WordPress site from one server to another with minimal downtime.