When it comes to fast-loading WordPress sites, caching is crucial. A well-optimized page cache can dramatically improve load time for visitors and reduce the load on your server. It’s a win-win situation! However, not all page caching solutions are equal. A simple plugin search for “caching” on WordPress.org will return thousands of results, but WordPress caching plugins aren’t the only option for page caching your site. In fact, they often don’t perform as well as a server-based solution such as Varnish or Nginx FastCGI caching.

In this post we’re going to compare Varnish vs Nginx FastCGI caching to see which will come out on top. While we’re at it, we’ll also benchmark WordPress without caching enabled and throw a WordPress caching plugin into the mix for good measure. I’ve opted for Simple Cache, because, as the name suggests, it’s simple to set up.

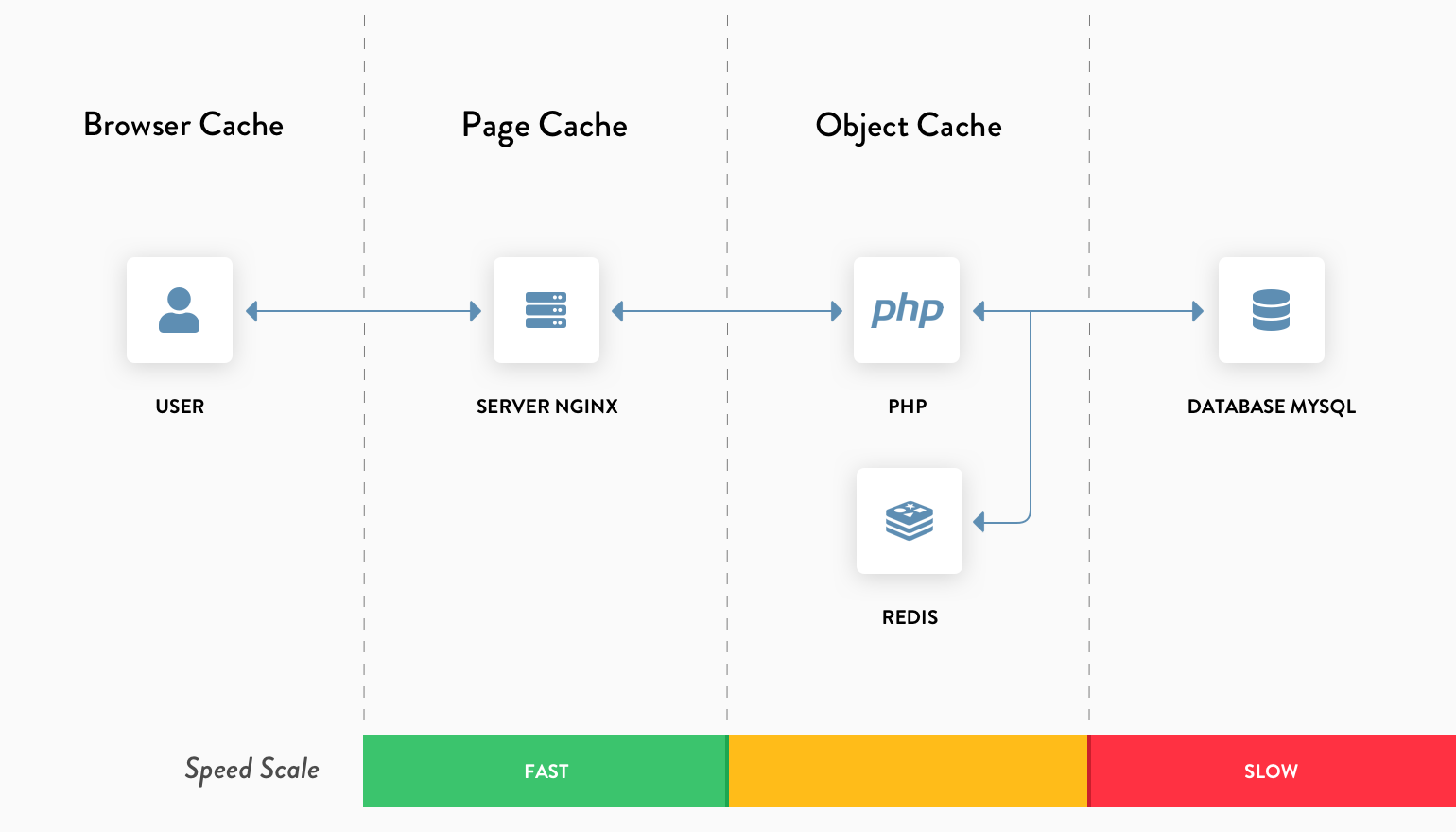

If you followed along with our Install WordPress on Ubuntu 22.04 series, you’re probably familiar with the stack but here’s a diagram as a refresher:

Varnish vs Nginx

Before we get to work and dive into benchmarking, let’s review each technology we’re going to compare and why we would use it for caching.

Varnish Cache is an open source caching reverse proxy, also described as a frontend accelerator for the web. It’s installed in front of the web server that handles HTTP requests and is set up to cache the contents of responses. It’s designed to be very fast and, according to the official documentation, can speed up content delivery by a factor of 300 to 1000, depending on the architecture.

Nginx started out as a simple web server designed for maximum stability and performance, and serving web requests is still its primary function. Over the years it has grown to handle reverse proxying, caching, and media streaming, and it can even act as a load balancer, among other things. Today, we’ll be configuring ours as a web server that passes requests to PHP-FPM. FastCGI is the protocol used to communicate between Nginx and PHP-FPM, and FastCGI caching will be configured to cache responses from PHP-FPM as static HTML files, which Nginx can serve directly on subsequent requests.

As you can see, they’re not exactly the same in functionality. Varnish is designed specifically around caching while Nginx is a web server with the ability to use caching tucked in under the hood. While Nginx doesn’t directly rely on anything else, Varnish does require a web server like Apache or Nginx to function.

How Do We Benchmark Caching?

We’ll benchmark with ApacheBench (ab), a CLI tool from the Apache Software Foundation that was originally created for testing how well Apache performs.

All of the tests in this article will be performed using the same options, except for testing WordPress with no cache configured. The reason is that sending that many concurrent requests directly to PHP and MySQL will do nothing but increase the average response time, which isn’t a fair representation of PHP’s performance.

ab -n 10000 -c 100 https://siteunderload.com/

This simulates 10,000 requests with a concurrency of 100, meaning ApacheBench will send a total of 10,000 requests while keeping up to 100 of them in flight at a time. For the non-cached version, I will use a concurrency of 20 requests.

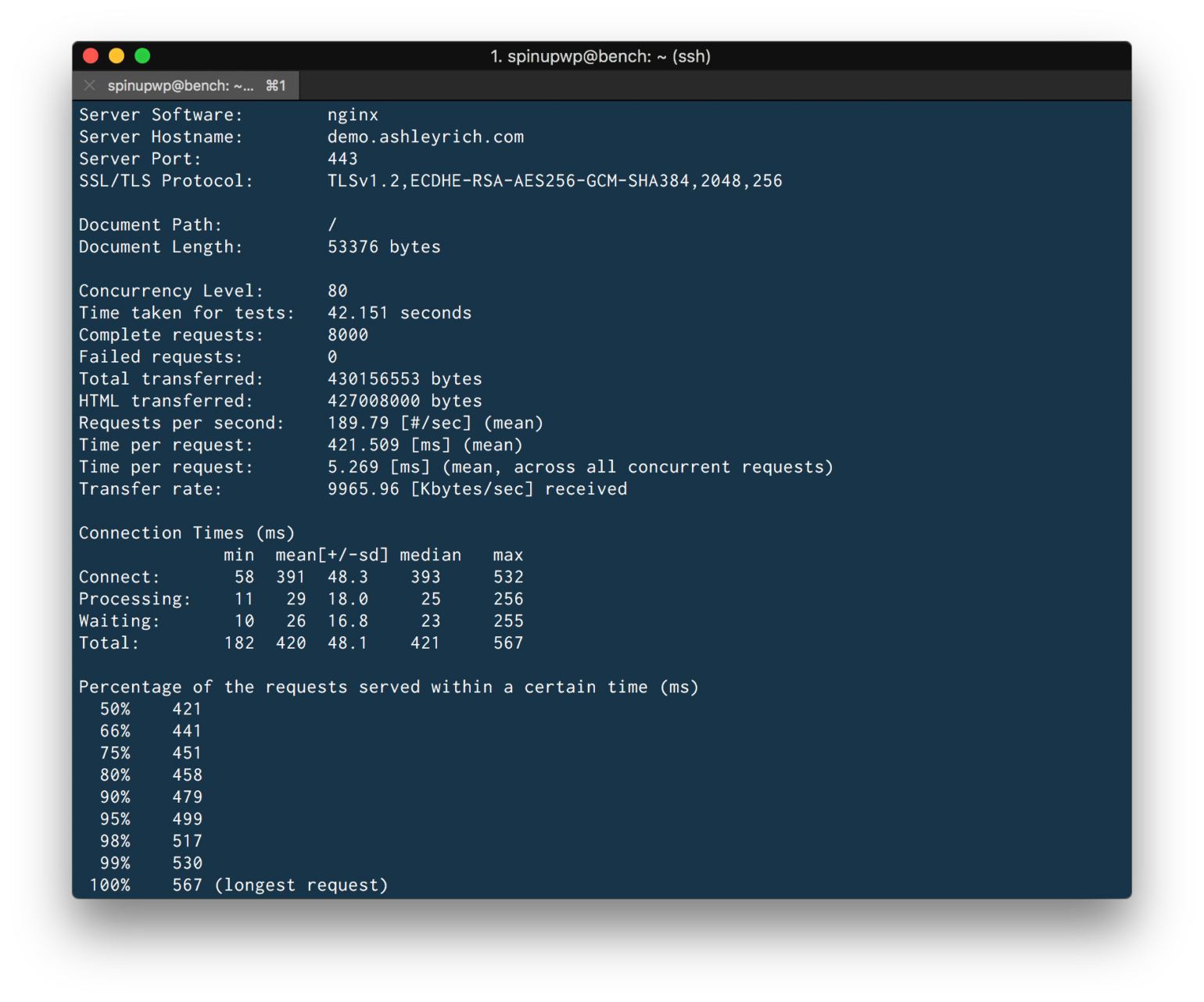

Running ApacheBench will output results similar to below:

In this article we’re mostly concerned with Requests per second and Connection Times. We will perform each test a total of 10 times and use the average for comparison.
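Averaging across runs is easy to script. The following is a minimal sketch, assuming the standard `Requests per second:` line that ApacheBench prints. The sample figures are hypothetical placeholders rather than results from this article, and the helper names (`run_ab`, `requests_per_second`, `average_rps`) are made up for illustration.

```python
import re
import subprocess
from statistics import mean

# Hypothetical excerpt of ApacheBench output; the figures are placeholders,
# not results measured for this article.
SAMPLE_OUTPUT = """\
Concurrency Level:      100
Complete requests:      10000
Requests per second:    1250.00 [#/sec] (mean)
Time per request:       80.000 [ms] (mean)
Time per request:       0.800 [ms] (mean, across all concurrent requests)
"""

RPS_PATTERN = re.compile(r"^Requests per second:\s+([\d.]+)", re.MULTILINE)


def requests_per_second(ab_output: str) -> float:
    """Extract the 'Requests per second' figure from one ab run."""
    match = RPS_PATTERN.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line found")
    return float(match.group(1))


def average_rps(runs: list[str]) -> float:
    """Average the requests-per-second figure across several ab runs."""
    return mean(requests_per_second(run) for run in runs)


def run_ab(url: str, concurrency: int = 100, total: int = 10000) -> str:
    """Run ab once and return its text output (requires ab to be installed)."""
    command = ["ab", "-n", str(total), "-c", str(concurrency), url]
    return subprocess.run(
        command, capture_output=True, text=True, check=True
    ).stdout
```

With ab installed, something like `average_rps([run_ab("https://siteunderload.com/") for _ in range(10)])` would mirror the ten-run average described above.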

We’re also only going to benchmark HTTPS, because every site should be using it at this point, for the performance, not to mention the security. Nowadays, HTTPS is everywhere, thanks mostly to Let’s Encrypt, and that’s a good thing!

The Server Stack

All benchmarks will utilize the same server stack, which consists of the following:

- DigitalOcean 2GB ($10/mo)

- Ubuntu 20.04

- PHP 7.4.14

- Nginx 1.18.0

- MariaDB 10.4.17

- Varnish 6.2.1

- WordPress 5.6, Twenty Twenty-One

We’ve been using DigitalOcean for a while, but if you’re interested, we also did some testing to find the best server provider for WordPress.

Varnish will be completely disabled when not needed for the current set of benchmarks. Nginx will be used to terminate HTTPS requests, because Varnish is unable to do so. This will result in the following configuration:

Nginx:443 > Varnish:80 > Nginx:8080

Notice we have Nginx acting as a proxy server to terminate HTTPS. There are other proxies available to perform this function, but I’ve opted for Nginx to keep the number of moving parts to a minimum.

We’ll be testing four different scenarios:

- WordPress – No caching

- Simple Cache – Caching via a WordPress plugin

- FastCGI Cache – Nginx

- Varnish – Varnish using Nginx for HTTPS termination

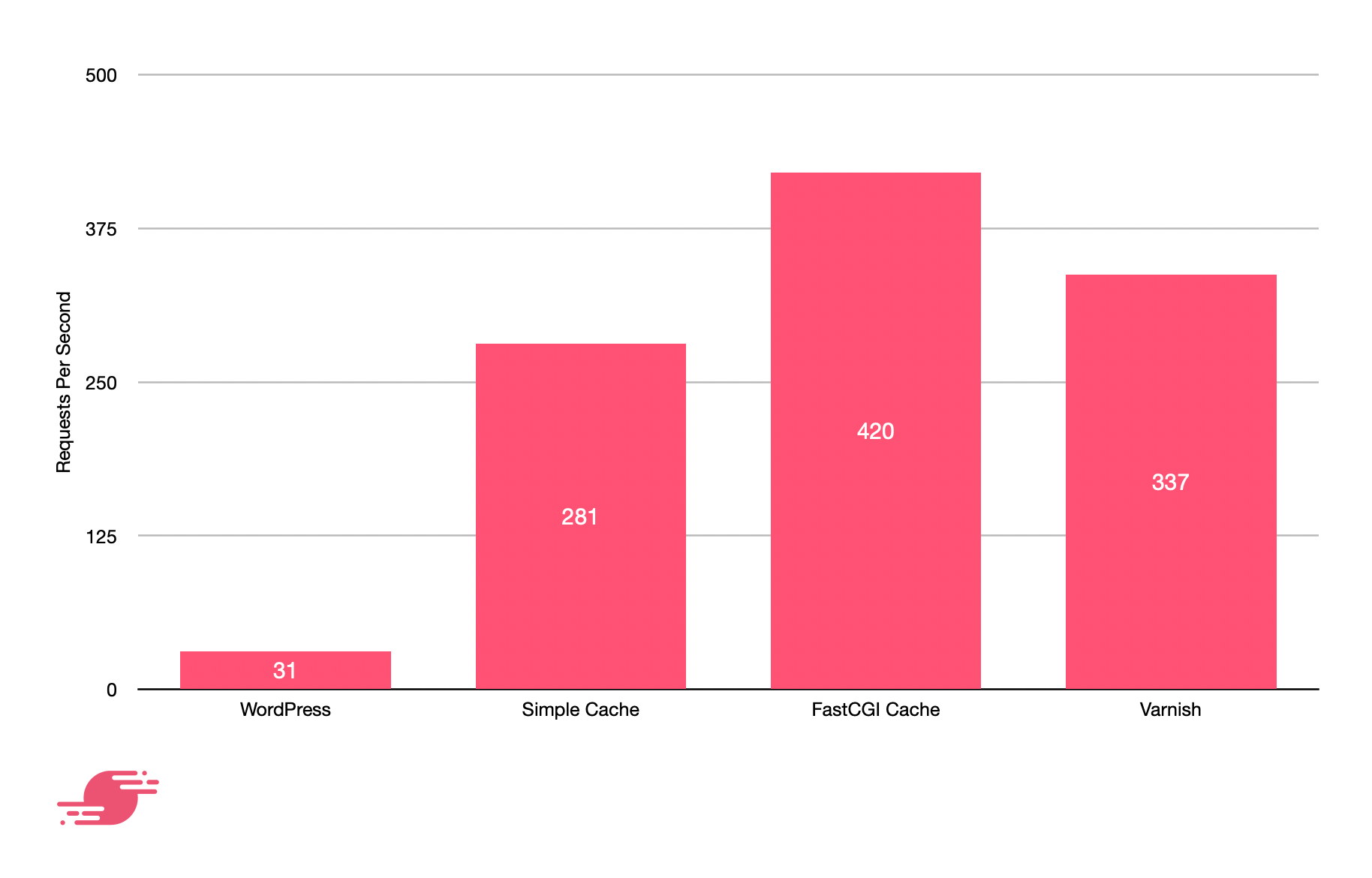

Requests Per Second

As expected, WordPress without caching doesn’t perform well, which is due to the bottleneck created by the database server. Simply taking the database server out of the equation by enabling a page cache gives nearly a 10x increase in requests per second. Varnish improves things further by ensuring requests don’t have to be processed by PHP. However, Varnish is way behind Nginx when it comes to raw throughput (requests per second), likely due to the additional step of Nginx HTTPS termination. Using Nginx alone achieves optimal throughput.

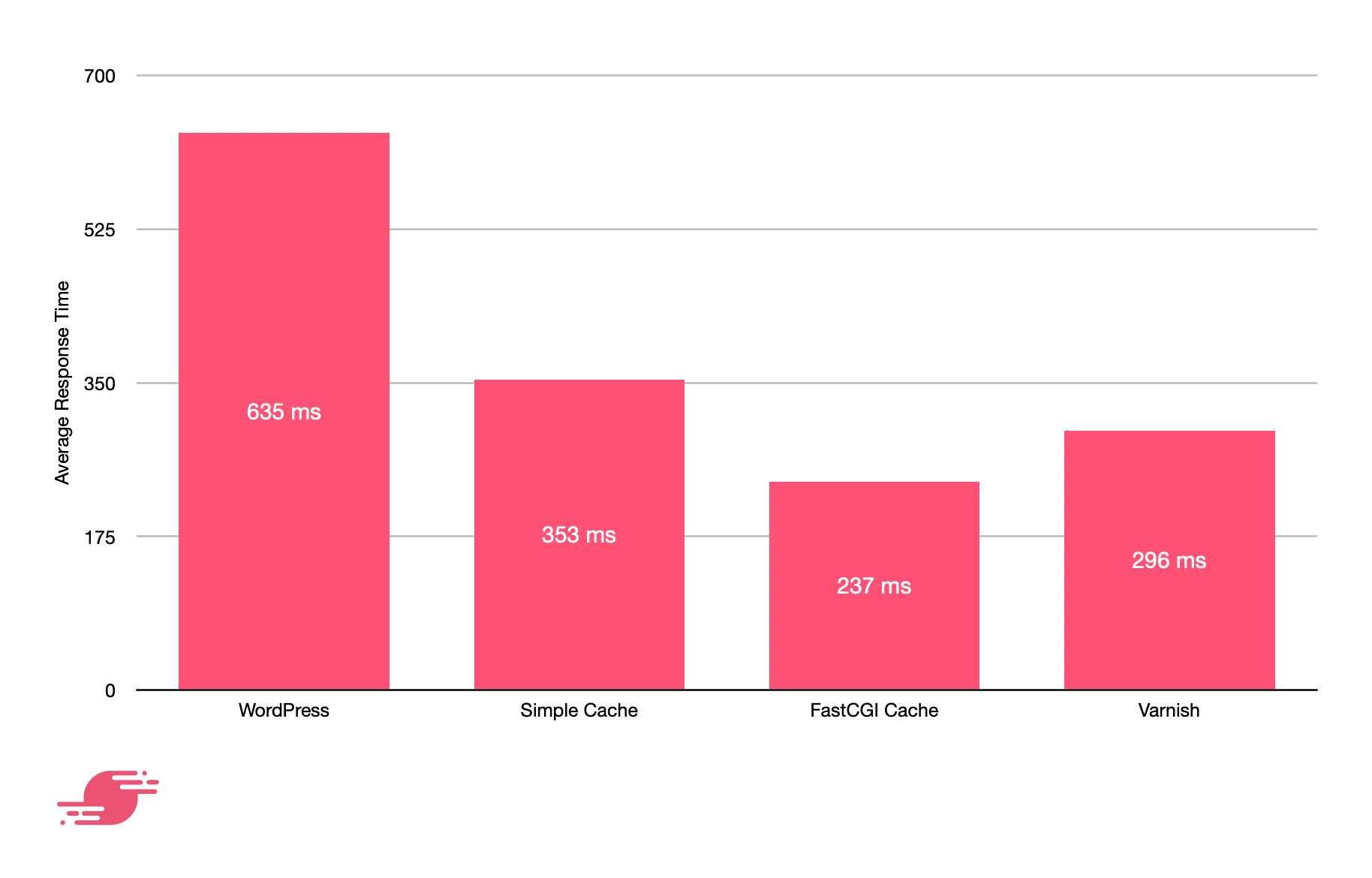

Average Response Time

A high number of requests per second doesn’t mean much if those requests are slow to complete, which is why it’s important to also measure response time. The average response time is the total time it takes for a request to complete.

When a server is under heavy load, the average response time will usually increase. This happens because the server is only able to handle a certain number of concurrent connections, usually due to CPU or memory bottlenecks. When this number is exceeded, all additional connections will be queued and processed as resources become available. If those requests are queued for too long, they will time out.
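The link between concurrency, throughput, and response time can be sketched with a little arithmetic. When a fixed number of requests is kept in flight, the mean time per request is roughly the concurrency divided by the throughput, which is also how ApacheBench derives its mean `Time per request` figure. The numbers below are hypothetical and only illustrate the relationship.

```python
def mean_time_per_request_ms(concurrency: int, requests_per_second: float) -> float:
    """Estimate mean time per request, in milliseconds.

    Assumes the load generator keeps `concurrency` requests in flight for
    the whole test, so each request waits its turn behind the others.
    """
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    return concurrency * 1000 / requests_per_second


# Hypothetical figures, not results from this article:
# 100 requests in flight against a server completing 1,250 requests/second.
cached_ms = mean_time_per_request_ms(100, 1250)

# 20 requests in flight against a server completing 125 requests/second.
uncached_ms = mean_time_per_request_ms(20, 125)
```

Under these assumed figures, the slower server takes longer per request even at a fifth of the concurrency, which is why raising concurrency against an uncached site mostly adds queueing time.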

Generally, the fewer moving parts you have in a request lifecycle, the lower the average response time will be. That’s why Nginx FastCGI caching performs so well, because it only has to serve a static file from disk (which will likely be cached in memory due to the Linux Page Cache). A request to a WordPress site with no caching will hit Nginx, PHP and the database server on the backend. With a caching plugin, you only hit Nginx and PHP. With page caching via Nginx FastCGI Cache or Varnish, you only hit Nginx or Varnish.
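To make the idea concrete, here is a toy page cache. It is only a sketch of the general technique, not how Nginx or Varnish are implemented, and `render_with_php_and_database` is a hypothetical stand-in for the PHP and database work behind an uncached request.

```python
from typing import Callable, Dict


class PageCache:
    """Serve stored HTML when possible and call the backend only on a miss."""

    def __init__(self, backend: Callable[[str], str]) -> None:
        self._backend = backend
        self._pages: Dict[str, str] = {}
        self.backend_calls = 0

    def get(self, url: str) -> str:
        if url in self._pages:
            # Cache hit: the backend (PHP and the database) is never touched.
            return self._pages[url]
        # Cache miss: do the expensive work once and remember the result.
        self.backend_calls += 1
        html = self._backend(url)
        self._pages[url] = html
        return html


def render_with_php_and_database(url: str) -> str:
    """Hypothetical stand-in for a full WordPress page render."""
    return f"<html>rendered {url}</html>"


cache = PageCache(render_with_php_and_database)
for _ in range(100):
    cache.get("/")
```

After 100 requests for the same URL, the backend has been invoked once. Every other request was answered from the stored copy, which is the work a page cache saves.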

Final Thoughts

When it comes to comparing Varnish vs Nginx FastCGI Cache, Nginx is the clear winner in outright high performance. It’s not only able to handle more requests per second, but also serves each request 59ms quicker on average.

Performance isn’t the only consideration, though. What about ease of configuration? WordPress plugins generally come out on top, excluding W3 Total Cache, whose control panel is unwieldy. Although Nginx FastCGI caching is relatively easy to configure, it requires sudo privileges on the server. Varnish, on the other hand, is far more complex to set up, due in part to the requirement for HTTPS termination.

If Varnish isn’t the quickest solution and is the most difficult to set up, why on earth would you opt for it? Quite simply, Varnish is still the best at handling more complex cache invalidation rules via Varnish Configuration Language (VCL). However, unless you’re setting up a web application that requires a complex caching structure, like an e-commerce platform, or serving lots of dynamic content mixed with caching, it’s generally not necessary.

Here at SpinupWP we opt for a longer cache duration (7 days) and purge the entire cache on content update. We’ve found this more reliable than trying to determine exactly which web page or pages should be purged from the cache, which can get complicated pretty quickly due to post archives and pagination.
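The policy itself is simple enough to sketch in a few lines. The class below is an illustration of the approach (a long expiry plus a full purge on update), not SpinupWP’s actual implementation. The names `ExpiringPageCache` and `on_content_update` are hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Tuple

SEVEN_DAYS = 7 * 24 * 60 * 60  # cache duration in seconds


class ExpiringPageCache:
    """Keep pages for a fixed duration and drop everything on purge."""

    def __init__(
        self,
        ttl_seconds: float = SEVEN_DAYS,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._entries: Dict[str, Tuple[float, str]] = {}

    def store(self, url: str, html: str) -> None:
        self._entries[url] = (self._clock(), html)

    def fetch(self, url: str) -> Optional[str]:
        entry = self._entries.get(url)
        if entry is None:
            return None
        stored_at, html = entry
        if self._clock() - stored_at >= self._ttl:
            del self._entries[url]  # expired: treat as a miss
            return None
        return html

    def purge_all(self) -> None:
        self._entries.clear()


def on_content_update(cache: ExpiringPageCache) -> None:
    """Purge everything rather than working out which URLs changed."""
    cache.purge_all()
```

Purging everything trades a brief period of extra cache misses for never having to track which archive or paginated pages include the updated post.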

With all that taken into consideration, Nginx FastCGI caching is still our preferred solution, which is why we removed Varnish from our server years ago and now rely purely on Nginx. It’s also how we set up every server that’s provisioned via SpinupWP.

What do you use for caching your WordPress sites? Let us know in the comments below.