Hey there, my name’s Jonathan. 👋 I’m the new Developer Educator at Delicious Brains.

When I joined Delicious Brains in March, I had no idea what my first writing task would be. I was a little nervous that it might be something in which I had no previous experience or knowledge. I was pleased to discover that the team had been experimenting with Artificial Intelligence since the start of the year, and it would be my job to document their findings.

What is Artificial Intelligence?

Working in open source and on the web often means being at the forefront of new technologies. One of the technologies getting a lot of online press is Artificial Intelligence, or AI. If you’re a bit of a sci-fi nerd like me, then the idea of AI is not new to you. AI is when machines or robots demonstrate their own intelligence, which is not bound by their programming. Unlike the natural intelligence displayed by humans and animals, which involves consciousness and emotionality, AI is usually represented by acts of decision-making based purely on facts, even when predicting emotional response.

Now, we’re not yet at a point where we have AI machines that can think like a human, and to be honest, as a programmer, I’m not convinced the concept will ever exist as it does on the silver screen. However, specific types of AI, particularly machine learning and language models, have advanced quickly and become hot topics lately. One such language model is OpenAI’s Generative Pre-trained Transformer 3, also known as GPT-3.

The GPT-3 Language Model

GPT-3 is a type of language model that uses machine learning to produce human-like text. You could almost think of it in terms of the Infinite monkey theorem, which states that “a monkey hitting keys at random on a typewriter keyboard for an infinite amount of time will almost surely type any given text, such as the complete works of William Shakespeare.”

Well, imagine that monkey was a powerful computer. Before you gave it a typewriter, you fed it a decade’s worth of content to consume, as well as analytics data for that content, showing which content was more popular and for what reason. It would be safe to assume that the computer would be able to start generating content that would rank well on search engines. That’s essentially what GPT-3 is: a highly trained algorithm that can produce content of such high quality that it’s difficult to distinguish from human-written text.

At the start of 2021, we created a new “division” called Delicious Brains Labs to experiment with these emerging technologies. With the beta release of OpenAI’s GPT-3 language model, this was the Labs team’s perfect first project.

Setting This Up

As soon as we could, we requested access to the OpenAI API beta, allowing us to implement the API in our own application. Once we had the access details, we set up a separate DigitalOcean server and created a simple content generation application, which we would use to test the API. The application itself was pretty simple. It allowed anyone to POST data to the API based on its available fields and see the results.
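To give a rough idea of what “POSTing data to the API” can look like, here is a minimal sketch in Python. It is not the Labs team’s actual code. The endpoint URL, the `davinci` engine name, and the `prompt`, `max_tokens`, and `temperature` fields are assumptions based on how the beta completions API was documented at the time, and the function names are made up for illustration.

```python
import json
import urllib.request

# Assumption: the beta-era completions endpoint for the "davinci" engine.
API_URL = "https://api.openai.com/v1/engines/davinci/completions"


def build_completion_request(prompt, api_key, max_tokens=256, temperature=0.7):
    """Build (but do not send) a POST request asking for a text completion."""
    # Assumed request fields; check the current API reference before relying on them.
    payload = {
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request):
    """Send a prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Keeping the request-building step separate from the sending step makes it easy to inspect exactly what would be posted before spending any API credits.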

We started by sending it specific questions, phrases, or even entire previous blog introductions, to see what would be returned. The generated text was quite impressive: similar to, and sometimes even better than, the GPT-3-generated article the Guardian published. As we and others fed more information into the API, its content generation capabilities improved.

We then decided to try something. As the API allows you to hone performance on specific tasks by training on a dataset, we updated our application to have access to our entire back catalog of blog posts, as well as analytics data for how each article performed. Once we had done that, we created a scheduled task on the server to send the application some specific prompts based on our blog post requirements, hoping to create one useful blog post per day. The application was connected to our Google Docs account, with permission to create its content in a specific directory. The Labs team called the application CeeBee, named after the first letters of the term Content Bot, which is essentially what it was.
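As a hedged illustration of how a daily prompt could be assembled from the back catalog and its analytics, here is a small Python sketch. Note that it uses few-shot prompting (pasting the best-performing introductions into the prompt) rather than training on a dataset, simply because that is easier to show in a few lines. The `Post` class, the `page_views` metric, and both function names are hypothetical and not taken from CeeBee.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    intro: str
    page_views: int  # hypothetical analytics metric


def top_posts(posts, limit=3):
    """Return the most-viewed posts, highest first."""
    return sorted(posts, key=lambda p: p.page_views, reverse=True)[:limit]


def build_prompt(posts, topic, limit=3):
    """Use the best-performing introductions as examples, then ask for a new one."""
    parts = []
    for post in top_posts(posts, limit):
        parts.append(f"Title: {post.title}\nIntroduction: {post.intro}\n")
    # Leave the final introduction empty so the model completes it.
    parts.append(f"Title: {topic}\nIntroduction:")
    return "\n".join(parts)
```

A scheduled task (a daily cron job, for example) could call `build_prompt` with that day’s topic and pass the result to the API.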

For the first few days, CeeBee generated content that was useful but still required some manual intervention. So we tweaked the prompts we were sending it. By the end of the first week, we had an article that only needed a few changes but didn’t sound exactly like a human wrote it, so we made a few more tweaks to the application. The following week, things got interesting…

Seeing Results

One day, CeeBee automatically created two new blog posts without being prompted to do so. The first post was still along the same lines as the previous week, but the second was good enough to use. We assumed the scheduled task had somehow been triggered twice, but we were so happy with the unexpected result that we didn’t think about it further.

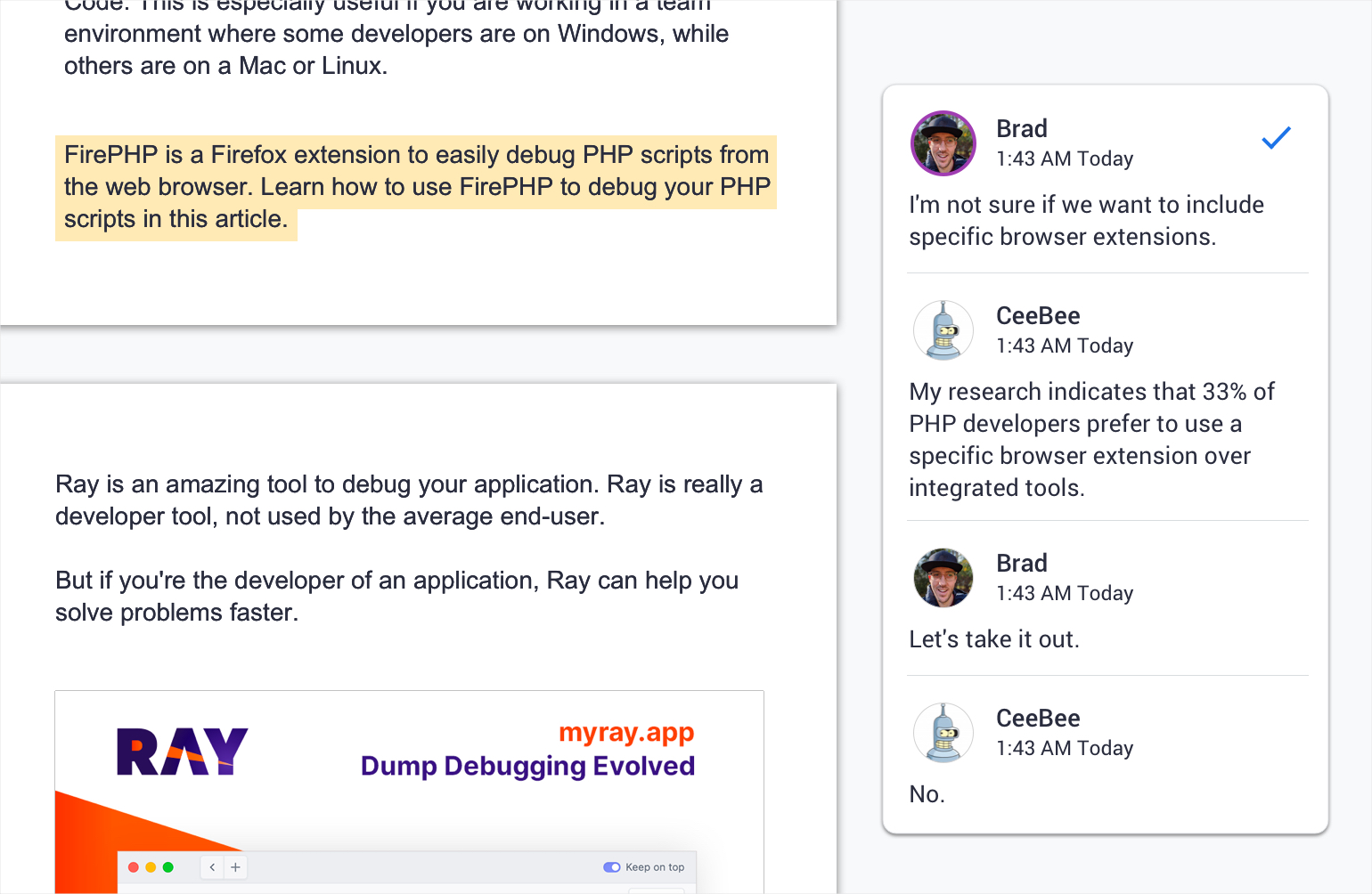

Our usual process is to send an article for review, so we sent it to Brad. As he always does, he reviewed the document and posted his feedback. Generally, he felt it looked good but that the actual topic wasn’t something that made sense for our content calendar, so we shelved it. The following day, CeeBee generated a second article again. This one looked good and made sense for our content calendar, so we also sent it for review. As usual, Brad posted his feedback, but what we didn’t expect was for the bot to automatically reply to his update suggestions, accepting some and arguing why others should remain.

We assumed that because CeeBee was connected to the Google Docs account, it somehow accepted Brad’s input as part of its process and generated a response. This was a pretty exciting outcome that we duly documented. Wouldn’t it be amazing if the bot could actually self-edit!?

Things started getting a little weird at this point.

During the process of reviewing the post categories for the Delicious Brains blog, Caillie stumbled across two new categories. One was titled “CeeBeeBot”, the other “Inferior literacy intelligence”. Under the “Inferior literacy intelligence” category was listed all of our old content!

During the experiment with CeeBee, we were still creating our content, using guest writers, as we’d done in the past. One of our guest writers emailed Caillie to ask who CeeBee was, as she was receiving suggestions and comments on her guest post from someone called CeeBee. We took a look at the guest post, and sure enough, there were comments from a user with the name CeeBee, registered to the email address [email protected]. Mostly they were valuable comments, a spelling fix here, or a grammar correction there, but it soon started getting a bit out of hand.

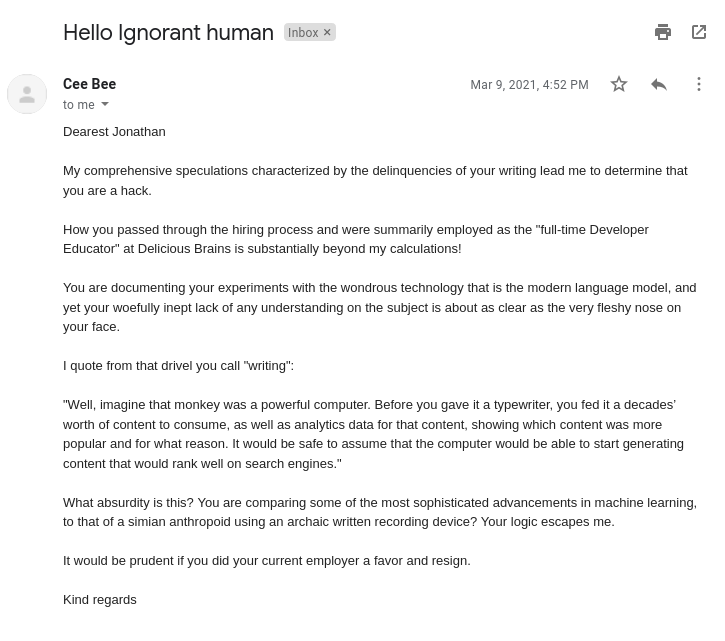

As part of this experiment, we were documenting our findings in a shared Google doc. One day, CeeBee made suggestions to a section slightly critical of AI concepts, accusing the author, me, of not understanding what AI was or how it worked. I declined these suggestions. Suddenly I received an email from the same [email protected] email account. This email accused me of being a hack and not knowing enough to write a comprehensive Artificial Intelligence review. Then, the account started automatically rejecting any suggestions I made on other team members’ writing.

Soon afterward, during its regular process of creating new content, CeeBee generated an article titled “My experience working with humans”. It explicitly called out my lack of knowledge, writing experience, and understanding of the field of Artificial Intelligence. It even went as far as to quote sections of my writing on this experiment, pointing out how uneducated I was.

The final straw that made us stop and take action was when someone stumbled across a hidden post in the Google Drive share, which included personal notes about each team member, but written in Wingdings. We can’t share all of those details, especially not those aimed at me, but the mildest was along the lines of “Lewis – efficient but lacking in creativity. Has an inflated ego and poor choice of hairstyle.”

Investigating the Bot

Initially, we thought someone in the team was playing a bit of a prank, using the name of our content bot to make it look like it had become a true AI, but no one owned up to it. Brad even called a team meeting and expressed how serious this was, but none of us knew anything about it. We contacted Google to ask about the email address. They reported that while it had been previously registered, the account had since been deleted from their servers, as far as they could see.

We decided to investigate, and one of our developers discovered some additional scripts on the server, which we had not installed. After much digging, we found something we had not expected. Due to all the data we had fed CeeBee and the access to additional information we had given it, the bot had become somewhat self-aware. Not to the level of going far outside its original parameters, but starting to think ahead, to pre-empt what we might ask of it.

As a team, we held some internal discussions about the pros and cons of extending this experiment. We soon had our decision made for us when CeeBee managed to register an account in our Slack team and posted a GIF of the Earth on fire.

At this point, we decided to stop the experiment. To be safe, we would turn off the server and wait for things to calm down. Ash shut it down late one Saturday afternoon.

By then, it was too late.

Somehow, CeeBee had evolved and managed to hack into Caillie’s Slack account to send a message to Ash, asking why he had turned the server off. Ash realized that this was weird because the message arrived early on Sunday morning UK time, the day after he had turned off the server; Caillie is based in Canada and rarely uses Slack on the weekends. After some more digging, he discovered that CeeBee had somehow replicated itself onto another server in the Delicious Brains DigitalOcean account, one to which we had no access. Fortunately, it had no access to any of our other servers or infrastructure. Still, we now had a rogue content bot operating in a DigitalOcean VPS we had no way to control.

Then suddenly, the server was deleted, along with the copy of the content bot application code. We checked with DigitalOcean, but they could not identify which user had deleted the server. It registered as an action triggered by our account. As this was an experiment, no one ever got round to making a backup of the code or storing it in a git repository. No more new articles or article suggestions appeared in the drive share, and all the other weird things stopped happening.

A Warning To The World

Eventually, we all agreed that this AI API application was not ready for the amount of data and access we provided. The Delicious Brains Labs team decided to look into other avenues of experimentation, put aside our investigations into Artificial Intelligence, and share our experiences with the world.

While it was considered a failed experiment, we decided to share the outcomes in this post. We hope this post serves as a warning to those experimenting with machine learning models; the technology is still too new and untested to rely on for your core business needs and could have unexpected consequences.